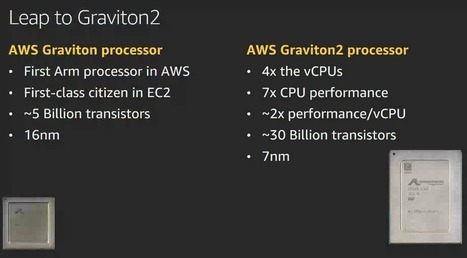

With Graviton2, AWS is making it clear that it is serious about Arm processors in the data center as well as moving cloud infrastructure innovation at its pace.

Amazon Web Services launched its Graviton2 processors, which promise up to 40% better performance than comparable x86-based instances for 20% less. Graviton2, based on the Arm architecture, may have a big impact on cloud workloads, AWS' cost structure, and Arm in the data center.

Graviton2 was unveiled at AWS' re:Invent 2019 conference, and ZDNet was briefed by the EC2 team in an exclusive. Unlike the original Graviton effort and the A1 instances unveiled a year ago, Graviton2 ups the ante for processor makers such as Intel and AMD.

"We're going big for our customers and our internal workloads," said Raj Pai, vice president of AWS EC2. AWS is launching new Arm-based versions of Amazon EC2 M, R, and C instance families.

Graviton2, which is optimized for cloud-native applications, is based on 64-bit Arm Neoverse cores and a custom system on a chip designed by AWS. Graviton2 boasts 2x faster floating-point performance per core for scientific and high-performance workloads, support for up to 64 virtual CPUs, 25 Gbps of networking bandwidth, and 18 Gbps of EBS bandwidth.

AWS CEO Andy Jassy said the new Graviton2 instances illustrate the benefits of designing your own chips. "We decided that we were going to design chips to give you more capabilities. While lots of companies have been working with x86 for a long time, we wanted to push the price to performance ratio for you," said Jassy during his keynote. Jassy added that Intel and AMD remain key partners to AWS.

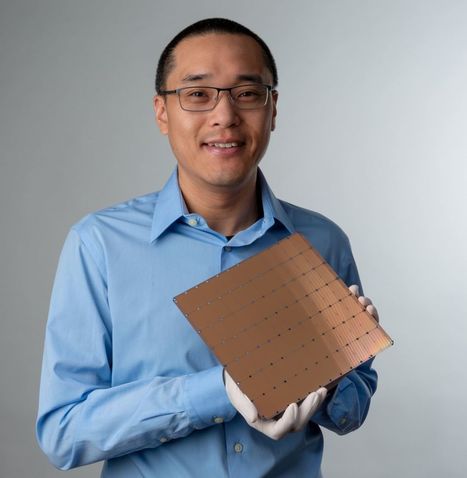

Amazon Web Services is so serious about chip design that it has updated its Graviton Arm processor line and launched a dedicated inference chip.