At its re:Invent conference, AWS today announced the launch of its Inferentia chips, which it first unveiled last year. These new chips promise to make inferencing, that is, using the machine learning models you trained earlier, significantly faster and more cost-effective.

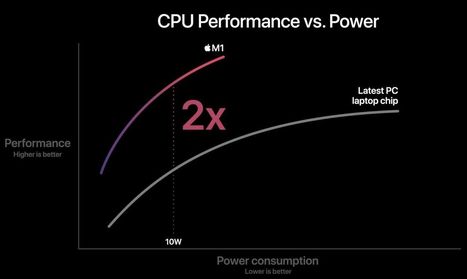

As AWS CEO Andy Jassy noted, a lot of companies are focusing on custom chips that let you train models (though Google and others would surely disagree there). Inferencing tends to work well on regular CPUs, but custom chips are obviously going to be faster. With Inferentia, AWS offers lower latency and three times the throughput at 40% lower cost per inference compared to a regular G4 instance on EC2.

The new Inf1 instances promise up to 2,000 TOPS and feature integrations with TensorFlow, PyTorch and MXNet, as well as the ONNX format for moving models between frameworks. For now, they’re only available in the EC2 compute service, but they will come to AWS’s container services and its SageMaker machine learning service soon, too.

At the negotiation table, US and China are now seated. Europe is still on the menu.