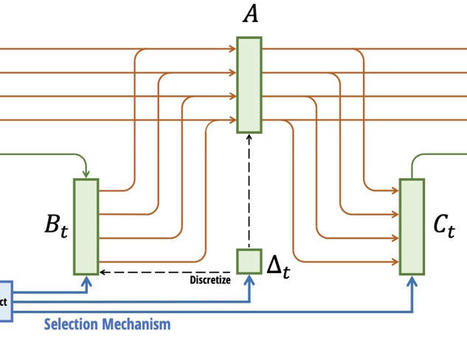

The article discusses the emergence of a non-attention architecture for language modeling, in particular Mamba, which has shown promising results in experimental tests. Mamba is an example of a state-space model (SSM). But what is a state-space model? State-space models (SSMs) are a class of mathematical models used to describe the evolution of […]


Building on the successes of structured state space models such as S4, Albert Gu and Tri Dao introduce Mamba (article), a selective SSM (its core layer is sometimes abbreviated S6) whose compute scales linearly with sequence length. The authors report state-of-the-art results in language, audio, and genomics, matching or outperforming Transformers of the same size and, in some cases, larger ones. Rather than using attention for context selection, Mamba makes its state-space parameters depend on the input, so the model can choose what to keep in its state and what to drop. Unlike previous SSMs, it pairs this selectivity with a hardware-aware GPU implementation that promises speedups in large-scale settings.
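To make the idea of a selective state space concrete, here is a minimal single-channel sketch in plain Python. It is not Mamba's implementation: the function name `selective_scan`, the scalar weights `w_delta`, `w_b`, and `w_c`, and the diagonal state matrix `a` are all assumptions chosen for illustration, and the real model uses learned projections and a fused GPU kernel. The sketch only shows the two properties the summary mentions: the step size and the input/output maps depend on the current input (selection), and the sequence is processed in a single pass whose cost grows linearly with its length.

```python
import math


def selective_scan(xs, a, w_delta, w_b, w_c):
    """Toy selective SSM over a 1-D sequence (illustrative, not Mamba's code).

    xs      : list of float inputs, one per time step
    a       : list of N negative floats, a diagonal continuous-time state matrix
    w_delta : hypothetical scalar weight producing the input-dependent step size
    w_b     : list of N hypothetical weights producing the input map B(x)
    w_c     : list of N hypothetical weights producing the output map C(x)
    """
    n = len(a)
    h = [0.0] * n  # hidden state, fixed size regardless of sequence length
    ys = []
    for x in xs:  # one pass over the sequence: O(len(xs) * N) work
        # Selection: parameters are functions of the current input.
        delta = math.log1p(math.exp(w_delta * x))  # softplus keeps the step positive
        b = [wb * x for wb in w_b]
        c = [wc * x for wc in w_c]
        for i in range(n):
            a_bar = math.exp(delta * a[i])  # zero-order-hold discretization of diagonal A
            b_bar = delta * b[i]            # simplified (Euler-style) discretization of B
            h[i] = a_bar * h[i] + b_bar * x
        ys.append(sum(c[i] * h[i] for i in range(n)))
    return ys


if __name__ == "__main__":
    outputs = selective_scan(
        xs=[1.0, 0.5, 0.0, 2.0],
        a=[-1.0, -0.5],
        w_delta=1.0,
        w_b=[1.0, 0.5],
        w_c=[1.0, 1.0],
    )
    print(outputs)
```

Because the state `h` has a fixed size, memory per step stays constant and total work grows in proportion to the sequence length, in contrast to attention, which compares every position with every other position.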