Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team

The field of natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data.

This blog post highlights recent research by Google, Stanford, UNC and DeepMind on the emergent abilities of large language models, specifically on arithmetic and word-meaning tasks.

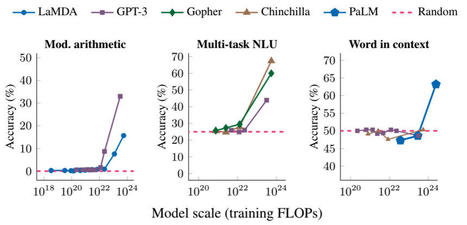

In their paper, Wei et al. define emergent abilities of large language models as "abilities that are not present in smaller-scale models but are present in large-scale models". For example, they observe that LLMs such as GPT-3 and PaLM fail almost completely at basic arithmetic tasks until model scale reaches roughly 10^22 training FLOPs, at which point performance jumps rapidly.

In my own research comparing implicit models and small-scale transformers on very simple arithmetic tasks, I have also observed that transformers extrapolate poorly and therefore fail to learn straightforward addition and multiplication.
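One simple way to make this kind of extrapolation failure measurable is to score a model separately on problems it was trained on, on unseen problems in the same operand range, and on problems with larger operands. The following is only a minimal sketch under stated assumptions, not the setup used in the research mentioned above: `LookupModel` is a hypothetical stand-in for a model that purely memorizes, and the operand ranges and sample sizes are arbitrary illustrative choices.

```python
import random


def make_addition_examples(low, high, n, seed):
    """Sample n addition problems with both operands drawn from [low, high]."""
    rng = random.Random(seed)
    return [(rng.randint(low, high), rng.randint(low, high)) for _ in range(n)]


def exact_match_accuracy(predict, examples):
    """Fraction of problems where predict(a, b) equals a + b exactly."""
    correct = sum(1 for a, b in examples if predict(a, b) == a + b)
    return correct / len(examples)


class LookupModel:
    """Hypothetical stand-in for a model that only memorizes its training set."""

    def __init__(self, train_examples):
        self.table = {(a, b): a + b for a, b in train_examples}

    def __call__(self, a, b):
        # Returns None for any pair that was not seen during "training".
        return self.table.get((a, b))


# Illustrative splits (assumed values): two-digit training data,
# fresh two-digit problems, and three-digit problems for extrapolation.
train = make_addition_examples(0, 99, 2000, seed=0)
in_range = make_addition_examples(0, 99, 500, seed=1)
out_of_range = make_addition_examples(100, 999, 500, seed=2)

model = LookupModel(train)
report = {
    "train": exact_match_accuracy(model, train),
    "in_range": exact_match_accuracy(model, in_range),
    "out_of_range": exact_match_accuracy(model, out_of_range),
}
print(report)
```

A pure memorizer scores perfectly on the training split and zero on the three-digit split. Swapping in a real model's prediction function for `LookupModel` would show how far it sits from that extreme on each split.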

I am curious to explore whether scale or multi-step prompting allows for a sudden jump in extrapolation, as highlighted in this paper.

More generally, I wonder whether we can find new ways to quantify learning that capture the memorization occurring on these tasks before the highlighted jump in performance.

Will a breakthrough in NLP result in the end of LLMs and the beginning of small but equally generalizable models? Or are LLMs only the start of a much larger beast?